In this article, we will look at three different methods of Autoscaling applications on AWS, leveraging AWS managed services as much as possible.

Scaling is when similar resources are added or removed depending on demand; this is usually done manually. An Autoscaling application monitors key performance metrics and, when these cross certain thresholds, either scales out (adds similar resources) or scales in (removes similar resources) to meet demand.
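At its core, the scale out/scale in decision is a comparison of a metric against two thresholds. The Python sketch below illustrates only that idea. The function name and the 70%/30% CPU thresholds are hypothetical, and real services such as EC2 Auto Scaling layer evaluation periods, alarms and cool downs on top of it.

```python
from enum import Enum


class Action(Enum):
    SCALE_OUT = "scale_out"
    SCALE_IN = "scale_in"
    NO_CHANGE = "no_change"


def decide(metric_value: float, scale_out_threshold: float, scale_in_threshold: float) -> Action:
    """Return the scaling action for one observation of a monitored metric.

    The gap between the two thresholds is a band in which nothing happens,
    which keeps the group from flapping between scale out and scale in.
    """
    if scale_in_threshold >= scale_out_threshold:
        raise ValueError("scale_in_threshold must be below scale_out_threshold")
    if metric_value > scale_out_threshold:
        return Action.SCALE_OUT  # demand is too high: add similar resources
    if metric_value < scale_in_threshold:
        return Action.SCALE_IN   # demand is low: remove similar resources
    return Action.NO_CHANGE


# Example: an average CPU of 85% with hypothetical thresholds of 70% (out) and 30% (in)
print(decide(85.0, 70.0, 30.0).value)  # scale_out
```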

Compared to manual scaling, Autoscaling can react almost instantaneously, depending on the setup. Combined with a Highly Available (HA) setup spread across more than one geographic location (for example, multiple Availability Zones), this helps maintain Service Level Agreements (SLAs).

Some definitions that you might find around scaling include:

- Scale out – Addition of similar resources to meet demand
- Scale in – Removal of similar resources to meet demand
- Autoscaling group – A group of similar resources that are (or will be) Autoscaled
- Desired capacity – The number of resources the Autoscaling group should currently be running
- Max and min capacity – The upper and lower limits on the number of resources in the Autoscaling group; Autoscaling never adds or removes resources beyond them, whatever the demand.
- Scalable dimension – The property of the resource that Autoscaling adjusts, for example the desired task count of an ECS service. It is driven by a metric such as CPU usage or network in.
- Scale in and scale out cool down – The amount of time that must pass after a scaling activity before another scale in or scale out can take place
- Health check – A request to the application/resource to determine whether it can still receive traffic. If it cannot, it is marked as unhealthy and removed. Health checks are usually performed by the Load Balancer that sends traffic to the individual resources in the Autoscaling group.
- Health check grace period – The time a new resource/application is given to start up; health checks only begin once it has passed
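To see how these terms interact, here is a simplified Python model of an Autoscaling group. It is a sketch under loud assumptions: the class and method names are hypothetical, and it treats a cool down as "ignore any scaling request until the cool down of the last scaling activity has passed", which is simpler than the exact rules AWS services apply.

```python
from dataclasses import dataclass, field


@dataclass
class AutoscalingGroup:
    """Simplified, hypothetical model of the terms above (not an AWS implementation)."""
    min_capacity: int
    max_capacity: int
    desired_capacity: int
    scale_out_cooldown: float         # seconds
    scale_in_cooldown: float          # seconds
    health_check_grace_period: float  # seconds
    _last_direction: int = field(default=0, init=False)    # +1 out, -1 in, 0 none yet
    _last_scaled_at: float = field(default=0.0, init=False)

    def __post_init__(self) -> None:
        if not 0 <= self.min_capacity <= self.desired_capacity <= self.max_capacity:
            raise ValueError("need 0 <= min <= desired <= max")

    def _in_cooldown(self, now: float) -> bool:
        if self._last_direction == 0:
            return False
        cooldown = self.scale_out_cooldown if self._last_direction > 0 else self.scale_in_cooldown
        return now - self._last_scaled_at < cooldown

    def scale(self, delta: int, now: float) -> int:
        """Request a scale out (delta > 0) or scale in (delta < 0); return the desired capacity."""
        if delta == 0 or self._in_cooldown(now):
            return self.desired_capacity
        # Clamp the new desired capacity to the min/max limits
        target = max(self.min_capacity, min(self.max_capacity, self.desired_capacity + delta))
        if target != self.desired_capacity:
            self._last_direction = 1 if target > self.desired_capacity else -1
            self._last_scaled_at = now
            self.desired_capacity = target
        return self.desired_capacity

    def health_checks_active(self, launched_at: float, now: float) -> bool:
        """Health checks only start once the grace period after launch has passed."""
        return now - launched_at >= self.health_check_grace_period


group = AutoscalingGroup(min_capacity=2, max_capacity=4, desired_capacity=2,
                         scale_out_cooldown=300, scale_in_cooldown=300,
                         health_check_grace_period=120)
print(group.scale(+1, now=0))     # 3
print(group.scale(+1, now=60))    # 3 (still inside the 300 s scale out cool down)
print(group.scale(+5, now=400))   # 4 (capped at max capacity)
print(group.scale(-10, now=800))  # 2 (floored at min capacity)
print(group.health_checks_active(launched_at=800, now=850))  # False (grace period of 120 s)
```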

It is important to note that not every application can use Autoscaling. The application must be designed with scaling in mind: it should be stateless, so that new resources can take traffic straight away and a failing resource does not prevent your Highly Available setup from meeting its SLAs.
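A small Python sketch can make the stateless requirement concrete. It assumes a load balancer that sends one user's requests round-robin to two instances; the class names are hypothetical, and the shared dict merely stands in for an external store such as a database or cache.

```python
from itertools import cycle
from typing import Dict, MutableMapping


class StatefulInstance:
    """Keeps session data in its own memory: other instances cannot see it,
    and it is lost when this instance is scaled in or fails."""

    def __init__(self) -> None:
        self.sessions: Dict[str, int] = {}

    def handle(self, session_id: str) -> int:
        self.sessions[session_id] = self.sessions.get(session_id, 0) + 1
        return self.sessions[session_id]


class StatelessInstance:
    """Keeps nothing locally; reads and writes a store shared by every instance."""

    def __init__(self, shared_store: MutableMapping[str, int]) -> None:
        self.store = shared_store

    def handle(self, session_id: str) -> int:
        self.store[session_id] = self.store.get(session_id, 0) + 1
        return self.store[session_id]


def simulate(instances, requests: int) -> list:
    """Round-robin one user's requests across the instances, as a load balancer might."""
    targets = cycle(instances)
    return [next(targets).handle("user-1") for _ in range(requests)]


print(simulate([StatefulInstance(), StatefulInstance()], 4))  # [1, 1, 2, 2]
shared: Dict[str, int] = {}
print(simulate([StatelessInstance(shared), StatelessInstance(shared)], 4))  # [1, 2, 3, 4]
```

The stateful instances give the user an inconsistent count, and their data would vanish on scale in; the stateless instances behave identically no matter which one receives the request.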

With most of the terminology out of the way, let’s start looking at our examples. They are ordered by their age and ability to scale.

1. Elastic Beanstalk

The CloudFormation template can be found here:

https://github.com/rehanvdm/awsautoscaling/blob/master/ElasticBeanstalk/cf.yaml

Elastic Beanstalk (EB) is one of the earliest AWS orchestration services. It is easy to configure and orchestrates many other AWS services, such as EC2, SQS, RDS, S3, SNS, Autoscaling, CloudWatch Alarms and Load Balancers, to bring your whole application together as one. Under the hood, Elastic Beanstalk turns the configuration you provide into a CloudFormation template that orchestrates and manages all these services for you.

Elastic Beanstalk is often overlooked. Some might argue that it is outdated and should not be used, but it is still a great entry point for anyone starting out on AWS. It makes complex setups easy while requiring little knowledge of the underlying infrastructure; to me, this is not a sign of age but of maturity. That is why Elastic Beanstalk is first on the list: it is old and wise, perfect for large websites (my opinion), but it shouldn’t be used for compute-intensive work.

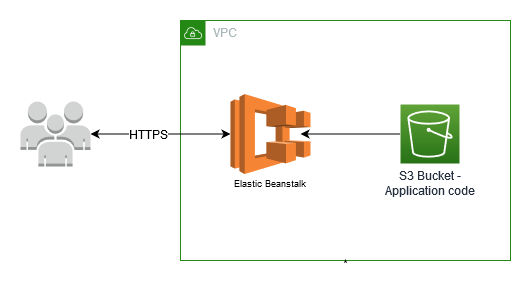

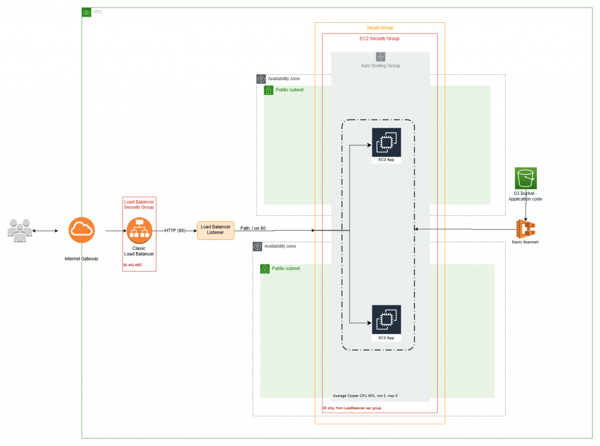

The first diagram above visualizes the CloudFormation template that defines an HA Elastic Beanstalk environment; the template comes in under 85 lines of YAML. Elastic Beanstalk then creates and manages a much bigger CloudFormation template of about 2500 lines on your behalf, containing more than 15 resources that together take up about 1500 of those lines. The gist of the resulting architecture is shown in the second diagram.

An important point to mention here is that you get a Classic Load Balancer that balances traffic over your subnets, which can be public or private depending on your external VPC setup. Elastic Beanstalk then creates all the Security Groups, the Classic Load Balancer, the Autoscaling group and so on.
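The article’s template is not reproduced here, but as an illustration, the Autoscaling part of an Elastic Beanstalk environment is configured through option settings. The Python sketch below builds a hypothetical `OptionSettings` list for an `AWS::ElasticBeanstalk::Environment` resource using the `aws:autoscaling:asg` and `aws:autoscaling:trigger` namespaces. The function name and all values are assumptions and are not necessarily what the repo’s cf.yaml contains.

```python
import json
from typing import Dict, List


def eb_option_settings(min_size: int, max_size: int, upper_cpu: int, lower_cpu: int,
                       health_check_path: str = "/") -> List[Dict[str, str]]:
    """Build a hypothetical OptionSettings list for an Elastic Beanstalk environment."""
    if not 0 < min_size <= max_size:
        raise ValueError("need 0 < min_size <= max_size")
    if not lower_cpu < upper_cpu:
        raise ValueError("lower_cpu must be below upper_cpu")
    settings = [
        # Load balanced (not single instance) environment behind a Classic Load Balancer
        ("aws:elasticbeanstalk:environment", "EnvironmentType", "LoadBalanced"),
        ("aws:elasticbeanstalk:environment", "LoadBalancerType", "classic"),
        # Min and max capacity of the Autoscaling group
        ("aws:autoscaling:asg", "MinSize", str(min_size)),
        ("aws:autoscaling:asg", "MaxSize", str(max_size)),
        # Trigger: scale out above upper_cpu %, scale in below lower_cpu %
        ("aws:autoscaling:trigger", "MeasureName", "CPUUtilization"),
        ("aws:autoscaling:trigger", "Unit", "Percent"),
        ("aws:autoscaling:trigger", "UpperThreshold", str(upper_cpu)),
        ("aws:autoscaling:trigger", "LowerThreshold", str(lower_cpu)),
        # Path the load balancer health check requests
        ("aws:elasticbeanstalk:application", "Application Healthcheck URL", health_check_path),
    ]
    # CloudFormation expects every option value as a string
    return [{"Namespace": ns, "OptionName": name, "Value": value} for ns, name, value in settings]


print(json.dumps(eb_option_settings(2, 4, 70, 30), indent=2))
```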

Elastic Beanstalk pulls your application code, packaged as a ZIP file, from S3 and deploys it to the environment. Each environment has specific configuration options depending on the programming language you choose. Elastic Beanstalk also has script hooks that can be used to set up the instance and container. These can easily be customized to install all kinds of applications and to create custom configurations on the actual instances. Also noteworthy is the Elastic Beanstalk CLI, whose many features and commands can be extremely helpful when migrating to Elastic Beanstalk.
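The ZIP that Elastic Beanstalk deploys is usually called a source bundle, and script hooks can ship inside it as `.ebextensions/*.config` files. As a sketch with hypothetical file names and contents, the bundle can be built in memory with Python’s standard `zipfile` module, keeping files at the root of the archive as Elastic Beanstalk expects. The resulting bytes could then be uploaded to S3, for example with boto3’s `put_object`.

```python
import io
import zipfile
from typing import Dict


def build_source_bundle(files: Dict[str, str]) -> bytes:
    """Return a ZIP (as bytes) with every file stored relative to the archive root.

    Elastic Beanstalk expects the application files at the root of the bundle,
    not inside a single top-level folder.
    """
    for path in files:
        if path.startswith("/") or ".." in path.split("/"):
            raise ValueError(f"paths must be relative to the bundle root: {path}")
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as bundle:
        for path, content in sorted(files.items()):
            bundle.writestr(path, content)
    return buffer.getvalue()


# Hypothetical application: one PHP page plus an .ebextensions config whose
# "commands" section runs on the instance during deployment.
bundle = build_source_bundle({
    "index.php": "<?php echo 'hello';",
    ".ebextensions/01-setup.config": "commands:\n  01_mark_deploy:\n    command: \"date > /tmp/last-deploy\"\n",
})
print(zipfile.ZipFile(io.BytesIO(bundle)).namelist())
# ['.ebextensions/01-setup.config', 'index.php']
```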

Many may start off with Elastic Beanstalk and later move to pure EC2 Autoscaling or ECS to get extra benefits that Elastic Beanstalk cannot provide out of the box. These include using Spot Instances to optimize cost, multi-region deployments, and reusing resources such as sharing a load balancer between multiple apps.

The whole point of Elastic Beanstalk is to abstract complexity away from you, so that you only need to bring your application in a ZIP file and have it Autoscaled over multiple AZs with minimal setup and knowledge of the underlying infrastructure.

2. ECS Fargate

The CloudFormation template can be found here:

https://github.com/rehanvdm/awsautoscaling/blob/master/Fargate/cf.yaml

Elastic Container Service (ECS) is one of the options AWS provides for running your containers. ECS offers two modes of operation: either you manage the underlying EC2 instances that run your Docker images yourself, or you let AWS do it for you; the latter is known as ECS Fargate. Fargate only cares about how many resources (CPU, memory, etc.) each container application needs and how many of them it must run for a service.
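Because Fargate sizes each task by CPU and memory, only certain combinations are accepted. The Python sketch below checks a task size against the Linux combinations as we understand the AWS documentation (CPU in units where 1024 = 1 vCPU, memory in MiB). The larger 8 and 16 vCPU sizes are left out, so treat the table as an assumption and verify it against the current docs.

```python
from typing import List

# Assumed task-level Fargate sizes for Linux: CPU units -> (min, max) memory in MiB.
# 256 CPU units is handled separately; 8 and 16 vCPU sizes are deliberately omitted.
_MEMORY_RANGES = {
    512: (1024, 4096),
    1024: (2048, 8192),
    2048: (4096, 16384),
    4096: (8192, 30720),
}


def valid_memory_options(cpu_units: int) -> List[int]:
    """Memory values (MiB) accepted for a given task CPU value."""
    if cpu_units == 256:
        return [512, 1024, 2048]
    if cpu_units not in _MEMORY_RANGES:
        raise ValueError(f"unsupported CPU value: {cpu_units}")
    low, high = _MEMORY_RANGES[cpu_units]
    return list(range(low, high + 1, 1024))  # 1 GiB steps


def is_valid_task_size(cpu_units: int, memory_mib: int) -> bool:
    try:
        return memory_mib in valid_memory_options(cpu_units)
    except ValueError:
        return False


print(is_valid_task_size(256, 512))    # True
print(is_valid_task_size(256, 4096))   # False
print(is_valid_task_size(1024, 3072))  # True
```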

“Fargate manages the underlying EC2 host instances and container placement.”

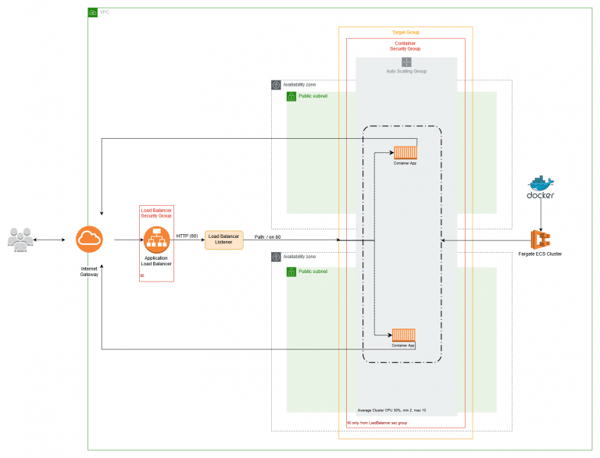

Like Elastic Beanstalk, Fargate can be run with or without Autoscaling, but it is more complex than the Elastic Beanstalk implementation because we need to define every resource manually. The CloudFormation template specifies 14 resources to run a minimal Fargate setup, coming in at around 200 lines of code without comments. The architecture diagram can be seen above.
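Autoscaling a Fargate service is one of the pieces you define by hand, through Application Auto Scaling; here the scalable dimension is `ecs:service:DesiredCount`. As a sketch, the Python function below emits a scalable target and a CPU target tracking policy as CloudFormation JSON. The logical IDs (`EcsCluster`, `EcsService`), the policy name, the cool downs and the 50% target are hypothetical and not taken from the repo’s template.

```python
import json
from typing import Any, Dict


def fargate_autoscaling_resources(cluster_logical_id: str, service_logical_id: str,
                                  min_tasks: int, max_tasks: int,
                                  target_cpu_percent: float) -> Dict[str, Any]:
    """Hypothetical CloudFormation resources that target-track an ECS service's average CPU."""
    if not 0 <= min_tasks <= max_tasks:
        raise ValueError("need 0 <= min_tasks <= max_tasks")
    return {
        "ServiceScalableTarget": {
            "Type": "AWS::ApplicationAutoScaling::ScalableTarget",
            "Properties": {
                "ServiceNamespace": "ecs",
                # The scalable dimension: the number of running tasks in the service
                "ScalableDimension": "ecs:service:DesiredCount",
                # Resolves to service/<cluster name>/<service name>
                "ResourceId": {"Fn::Sub": f"service/${{{cluster_logical_id}}}/${{{service_logical_id}.Name}}"},
                "MinCapacity": min_tasks,
                "MaxCapacity": max_tasks,
                # RoleARN is optional; see the Application Auto Scaling service-linked role docs
            },
        },
        "ServiceCpuScalingPolicy": {
            "Type": "AWS::ApplicationAutoScaling::ScalingPolicy",
            "Properties": {
                "PolicyName": "cpu-target-tracking",
                "PolicyType": "TargetTrackingScaling",
                "ScalingTargetId": {"Ref": "ServiceScalableTarget"},
                "TargetTrackingScalingPolicyConfiguration": {
                    "PredefinedMetricSpecification": {
                        "PredefinedMetricType": "ECSServiceAverageCPUUtilization"
                    },
                    "TargetValue": target_cpu_percent,
                    "ScaleOutCooldown": 60,   # seconds, hypothetical
                    "ScaleInCooldown": 120,   # seconds, hypothetical
                },
            },
        },
    }


resources = fargate_autoscaling_resources("EcsCluster", "EcsService", 1, 4, 50.0)
print(json.dumps({"Resources": resources}, indent=2))
```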

It has much the same components as Elastic Beanstalk. The major differences are that the applications run in containers orchestrated by ECS Fargate and that the application is now created from a Docker image. This setup also requires a load balancer to distribute traffic to the service, which is the group of individual running Docker applications (tasks) that scale in and out depending on demand.

Fargate is great when your applications are already dockerized and you do not want to manage container hosts. Cost-wise, it is also well suited to spiky, underutilized Docker applications that might not use a full EC2 host instance. For high-throughput, constantly resource-intensive applications that can be tightly packed onto a single instance, ECS on your own EC2 instances is the better option.
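The cost argument is essentially arithmetic: Fargate bills for the vCPU and memory of tasks while they run, whereas EC2 instances cost the same whether they are busy or not. The Python sketch below uses hypothetical placeholder prices (not current AWS rates) and assumes the EC2 fleet is sized for peak load and kept running all day.

```python
import math


def fargate_daily_cost(task_hours: float, vcpu_per_task: float, gb_per_task: float,
                       price_per_vcpu_hour: float, price_per_gb_hour: float) -> float:
    # Fargate bills for the vCPU and memory requested by tasks while they run.
    return task_hours * (vcpu_per_task * price_per_vcpu_hour + gb_per_task * price_per_gb_hour)


def ec2_daily_cost(peak_tasks: int, tasks_per_instance: int, price_per_instance_hour: float) -> float:
    # Simplification: enough instances for the peak stay up for all 24 hours.
    instances = math.ceil(peak_tasks / tasks_per_instance)
    return instances * 24 * price_per_instance_hour


# Hypothetical placeholder prices, NOT current AWS rates.
VCPU_HOUR, GB_HOUR, INSTANCE_HOUR = 0.04, 0.004, 0.08

# Spiky: 8 tasks (0.5 vCPU, 1 GB each) for 2 hours, 1 task for the remaining 22 hours.
spiky_task_hours = 8 * 2 + 1 * 22  # 38 task-hours
print(f"{fargate_daily_cost(spiky_task_hours, 0.5, 1, VCPU_HOUR, GB_HOUR):.2f}")  # 0.91
print(f"{ec2_daily_cost(8, 4, INSTANCE_HOUR):.2f}")                                # 3.84

# Constant: 8 tasks all day, same instances packing 4 tasks each.
print(f"{fargate_daily_cost(8 * 24, 0.5, 1, VCPU_HOUR, GB_HOUR):.2f}")  # 4.61
print(f"{ec2_daily_cost(8, 4, INSTANCE_HOUR):.2f}")                      # 3.84
```

With these made-up numbers the spiky workload is far cheaper on Fargate, while the constant workload tips in favour of EC2; with real prices the crossover will sit elsewhere.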

3. Application Load Balancer and Lambda

The CloudFormation template can be found here:

https://github.com/rehanvdm/awsautoscaling/blob/master/LambdaALB/cf.yaml

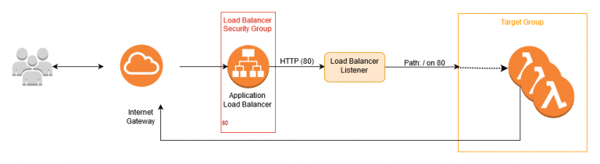

We stick with the load balancer approach used by the previous solutions. AWS Lambda could just as well have been invoked by API Gateway, which is the most popular way to call Lambda. Both invocation methods scale Lambda in near real-time, depending on the configuration. AWS Lambda scales the fastest by far, but it has its own unique set of limitations.

*Note: the architecture diagram deliberately excludes any mention of VPCs, as that is a bit out of scope for this topic.

API Gateway is the most cost-effective option when the API has spiky, low usage, while the Application Load Balancer (ALB) trumps API Gateway when the API requires high or constant throughput.
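The same break-even reasoning applies here: API Gateway charges per request, while an ALB charges per hour plus Load Balancer Capacity Units (LCUs). The Python sketch below finds the monthly request count at which the two cost the same. All prices are illustrative placeholders, LCU usage is assumed to be a constant average, and Lambda costs are assumed to be the same behind either front end.

```python
HOURS_PER_MONTH = 730  # a common approximation of 365 * 24 / 12


def alb_monthly_cost(alb_hour_price: float, lcu_hour_price: float, average_lcus: float) -> float:
    # Simplification: LCU consumption is treated as a constant average.
    return HOURS_PER_MONTH * (alb_hour_price + lcu_hour_price * average_lcus)


def api_gateway_monthly_cost(requests: float, price_per_million: float) -> float:
    return requests / 1_000_000 * price_per_million


def break_even_requests(alb_cost: float, price_per_million: float) -> float:
    """Monthly requests above which API Gateway costs more than the ALB."""
    return alb_cost / price_per_million * 1_000_000


# Illustrative placeholder prices only; look up current regional pricing before relying on this.
alb = alb_monthly_cost(alb_hour_price=0.0225, lcu_hour_price=0.008, average_lcus=1)
print(f"{break_even_requests(alb, price_per_million=3.50):,.0f}")  # 6,361,429
```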

API Gateway offers other extras, such as authentication, VTL templates, stages, usage plans and throttling, that ALB does not. ALB has its strengths too, the biggest being that a request is not limited to roughly 30 seconds as with API Gateway, but only by the Lambda limit of 15 minutes.

Whether ALB or API Gateway is used, Lambda runs on Firecracker (blazing-fast micro VMs), which starts and scales almost instantly. Lambda definitely requires the least setup and hardware babysitting of all the solutions. This shows in the CloudFormation template needed for the minimal ALB and Lambda setup: it comes in under 100 lines of YAML and requires only 6 other AWS resources, compared to the 14 resources of the Fargate template and the more than 15 that Elastic Beanstalk creates.
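For reference, a Lambda function behind an ALB receives the HTTP request as an event and returns a response object with `statusCode`, `statusDescription`, `headers`, `body` and `isBase64Encoded`. The Python handler below is a hypothetical sketch of a Fibonacci endpoint similar to the one used in the tests; the query parameter `n`, the `MAX_N` guard and the path in the usage line are assumptions, and the repo’s own function may be written differently.

```python
import json
from typing import Any, Dict

MAX_N = 1000  # hypothetical guard so a single request cannot run for too long


def fibonacci(n: int) -> int:
    """Iterative Fibonacci: fibonacci(0) == 0, fibonacci(1) == 1."""
    a, b = 0, 1
    for _ in range(n):
        a, b = b, a + b
    return a


def _response(status: int, reason: str, payload: Dict[str, Any]) -> Dict[str, Any]:
    # Response shape that the ALB translates back into an HTTP response.
    return {
        "statusCode": status,
        "statusDescription": f"{status} {reason}",
        "isBase64Encoded": False,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    # With multi-value headers disabled, the ALB passes query parameters as a flat dict.
    params = event.get("queryStringParameters") or {}
    try:
        n = int(params.get("n", "10"))
    except ValueError:
        return _response(400, "Bad Request", {"error": "n must be an integer"})
    if not 0 <= n <= MAX_N:
        return _response(400, "Bad Request", {"error": f"n must be between 0 and {MAX_N}"})
    return _response(200, "OK", {"n": n, "fibonacci": fibonacci(n)})


print(handler({"httpMethod": "GET", "path": "/fibonacci", "queryStringParameters": {"n": "10"}}, None)["body"])
# {"n": 10, "fibonacci": 55}
```

In CloudFormation, such a function also needs an `AWS::Lambda::Permission` that allows `elasticloadbalancing.amazonaws.com` to invoke it before it can be registered as a target.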

Tests

It is a bit difficult to test and compare three different architectures against each other, each with its own unique set of parameters. A simple load test is done with Artillery, which can also be found in the GitHub repo. The test calls /fibonaci.php for 10 minutes at 4 calls per second. The results can be seen in CloudWatch Metrics; depending on the solution, you can see EC2 instances (Elastic Beanstalk) or tasks (Fargate) scaling in and out.
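The tests themselves use Artillery. Purely to illustrate what "4 calls per second for 10 minutes" means, here is a standard-library Python sketch of a constant arrival rate load generator: requests are fired on a fixed schedule without waiting for earlier responses, up to `max_workers` in flight at once. The function names are hypothetical, the URL is a placeholder, and connection errors are not handled.

```python
import time
import urllib.error
import urllib.request
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def arrival_times(rate_per_second: float, duration_seconds: float) -> List[float]:
    """Offsets (seconds from start) at which to fire requests for a constant arrival rate."""
    total = int(rate_per_second * duration_seconds)
    return [i / rate_per_second for i in range(total)]


def make_http_sender(url: str, timeout: float = 30.0) -> Callable[[], int]:
    """Return a function that GETs url once and returns the HTTP status code."""
    def send() -> int:
        try:
            with urllib.request.urlopen(url, timeout=timeout) as response:
                return response.status
        except urllib.error.HTTPError as error:
            return error.code  # 4xx/5xx responses still count as completed requests
    return send


def run_load_test(send: Callable[[], int], rate_per_second: float,
                  duration_seconds: float, max_workers: int = 32) -> List[int]:
    """Submit send() on schedule to a thread pool instead of waiting for each response."""
    start = time.monotonic()
    futures = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        for offset in arrival_times(rate_per_second, duration_seconds):
            delay = start + offset - time.monotonic()
            if delay > 0:
                time.sleep(delay)
            futures.append(pool.submit(send))
    return [future.result() for future in futures]


print(len(arrival_times(4, 600)))  # 2400 requests: 4 per second for 10 minutes
# statuses = run_load_test(make_http_sender("http://YOUR-LOAD-BALANCER/fibonaci.php"), 4, 600)
```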

For Elastic Beanstalk and Fargate, the outcome is pretty close. Elastic Beanstalk is slower because it needs to create the actual EC2 instance and do a number of things to get the instance, and the container within it, ready. Using pre-baked AMIs improves this significantly and is the recommended approach for EC2 Autoscaling. Fargate uses the same Firecracker micro VMs as Lambda, so it can pull and start a Docker image much faster than Elastic Beanstalk can provision EC2 instances.

Lambda is obviously the winner when it comes to scale in and scale out timing. It scales in near real-time; to be honest, it still baffles me that on a cold start it can pull and deploy your code in under a second (about 500 ms for this repo’s example).

TL;DR: Choose a scaling method that suits your application. Lambda will always scale the fastest.

Summary

The table below gives a quick summary of what we discussed.

| | Complexity | Extensibility | Compute Engine | Time to Scale (more + = slower) |
|---|---|---|---|---|
| Elastic Beanstalk | Easy | Moderate | Servers | +++++++++ |
| ECS Fargate | Moderate – High | High | Docker | +++ |
| Lambda | Low – Moderate | High | Serverless Lambda | + |

- Elastic Beanstalk is a good fit if you have legacy apps that need scaling with minimal knowledge of AWS.
- Use ECS Fargate if your apps are already dockerized and you don’t already have too many sidecars helping with Docker orchestration.
- AWS Lambda will be the best choice if you are starting something from scratch that has low to moderate traffic. It has other limitations that need to be kept in mind, though.